With multiple data sets available on Dewey, matching those with other datasets is one of the issues. Typically many data come with the street address and/or geocode (lat, long). I have tried Placekey (placekey.io), geocode matching, and fuzzy matching. Starting from Placekey, since some datasets like Advan patterns includes it, I am going to compare them.

First, you can obtain Placekey API from Placekey | Solve Address Matching Problems with a Free Universal Location ID. They provide wonderful documentation on their API. Once you have a key, then you can use the following R codes to generate Placekeys.

library(httr);

library(rjson);

library(jsonlite);

library(rstudioapi);

library(plyr);

PLACEKEY_URL = "https://api.placekey.io/v1/placekey";

PLACEKEYS_URL = "https://api.placekey.io/v1/placekeys";

placekey_list_to_df = function(x) {

# x = res_con[[2]]

qid = x$query_id;

pk = x$placekey;

if(is.null(pk)) {

pk = NA;

}

er = x$error;

if(is.null(er)) {

er = NA;

}

return(data.frame(query_id = qid, placekey = pk,error = er));

}

# get multiple Placekeys

get_placekeys_single_batch = function(apikey, location, query_id = NULL) {

# location = data.frame(rhub[1:5, c("address", "city", "state", "zip")],iso_country_code = "US")

# location = sub_loc

# query_id = sub_query_id

if(is.null(query_id)) {

query_id = paste0("", 1:nrow(location));

}

loc_df = data.frame(query_id = query_id, as.data.frame(location));

if(ncol(location) == 2) {

colnames(loc_df) = c("query_id",

"latitude", "longitude")

} else if(ncol(location) == 5) {

colnames(loc_df) = c("query_id",

"street_address", "city",

"region", "postal_code", "iso_country_code")

} else if(ncol(location) == 6) {

colnames(loc_df) = c("query_id",

"location_name",

"street_address", "city",

"region", "postal_code", "iso_country_code")

} else {

print("check your location data.")

return (NULL);

}

loc_df_json_str = toJSON(loc_df);

body_str = paste0('{"queries": ', loc_df_json_str, '}');

response = POST(PLACEKEYS_URL,

add_headers(.headers = c("apikey"=apikey,

"Content-Type"="application/json")),

body = body_str);

if(response$status_code != 200) {

print(paste0("Error with status code ", response$status_code));

print(content(response));

return(NULL);

}

res_con = content(response);

if(length(res_con) > 0) {

res_con_regular = lapply(res_con, placekey_list_to_df);

res_df = do.call(rbind.data.frame, res_con_regular);

# bb = ldply(res_con, data.frame)

return(res_df);

} else {

return (NULL);

}

}

get_placekeys = function(apikey, location, query_id = NULL, query_limit = 1000) {

# location = data.frame(rhub[, c("address", "city", "state", "zip")], iso_country_code = "US")

n_row = nrow(location);

n_queries = ceiling(n_row/query_limit);

if(is.null(query_id)) {

query_id = paste0("", 1:n_row);

}

placekeys_buff = NULL;

for(n_query in 1:n_queries) {

print(paste0("Querying batch number ", n_query, "/", n_queries))

beg_index = 1 + (n_query-1) * query_limit;

end_index = n_query * query_limit;

if(end_index > n_row) {

end_index = n_row

}

sub_query_id = query_id[beg_index:end_index];

sub_loc = location[beg_index:end_index, ];

placekeys_df = get_placekeys_single_batch(apikey, sub_loc, sub_query_id);

if(is.null(placekeys_df)) {

return(NULL);

}

placekeys_buff = rbind(placekeys_buff, placekeys_df)

}

return(placekeys_buff);

}

There are two major functions you can use

get_placekeys_single_batch and get_placekeys.

Both functions generate Placekeys for multiple locations. However, Placekey limits the number of locations per query to 100ish (I could generate more than that, though, but there is definitely a limit.) So, if your data has fewer than that limit, you can use get_placekeys_single_batch. If you have more data points (most likely), then use get_placekeys. get_placekeys simply splits location data and call get_placekeys_single_batch multiple times.

get_placekeys parameters:

apikey: your API key in string

location: data.frame of your location (address or geocode)

query_id: custom name of each of your locations. Default value is NULL and if that is the case, query_id will be integer numbers starting from 1.

query_limit: number of locations per query. Default is 1000. The 1000 worked for me most of the cases, but if you encounter errors, reduce it to 200 or fewer.

Here are some examples.

You can pass (lat, long) geocode. You can pass multiple locations in a data.frame.

get_placekeys_single_batch(apikey, data.frame(lat = 37.69640, long = -122.4804))

You can pass street address.

# McDonalds in NY

df1 = data.frame(location_name = NA,

address= "541 6th Ave",

city = "New York",

state = "NY", zip ="10011",

iso_country_code = "US")

# McDonalds in NY with location_name

df11 = data.frame(location_name = "McDonald's",

address= "541 6th Ave",

city = "New York",

state = "NY", zip ="10011",

iso_country_code = "US")

# McDonalds in NY with location_name left out.

df12 = data.frame(address= "541 6th Ave",

city = "New York",

state = "NY", zip ="10011",

iso_country_code = "US")

df1, df11 and df12 contain the address for McDonalds in NY. Columns should be in the order of location_name, address, city, state and iso_country_code. You can name the columns as you like as far as the order is correct. In df1, location_name is set to NA, in df11 location_name is given and df12 location_name is omitted, which is fine. Most of the cases, data comes without location name and df12 will be most common practice. Let’s get Placekey for df1 (without location_name).

get_placekeys_single_batch(apikey, df1);

# or

get_placekeys(apikey, df1);

Then, with location name

get_placekeys_single_batch(apikey, df11);

Same as before! This potentially means Placekey does not have the business name for this location yet.

It runs the same when location_name is totally left out from the query.

get_placekeys_single_batch(apikey, df12);

Let’s try another McDonald’s in CA without and with location_name. First, without location_name

get_placekeys_single_batch(apikey, df2);

and with location_name

get_placekeys_single_batch(apikey, df21);

Now it has different Placekyes! With business names, we can get more precise Placekey. I will discuss this further later but many datasets do not have business names, unfortunately.

Keep moving on to the df3, which does not exist in Placekey, here is the result.

get_placekeys_single_batch(apikey, df3);

It prints an error message from the server. Finally, df4, which contains all of the above addresses,

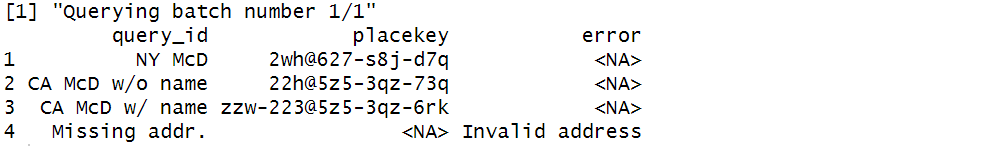

query_id = c("NY McD", "CA McD w/o name", "CA McD w/ name", "Missing addr.")

get_placekeys(apikey, df4, query_id)

The last column of output is “error” message, and the 4th one has an “Invalid address” error.

If you want to match the dataset using Placekey, you will generate Placekey for your datasets and compare them.

In the next post, I will move on to geocode option.

Donn